The belated next generation: how are realistic VR game characters made?

Lei Feng Network note: This article was written by Commanding Bin, a character modeler at the VR studio Shortfuse.

VR is incredible, but there are no textbooks for it yet. We hope to share our experience so that more people can understand VR, love VR, and join VR. Let VR engineers cut through the jargon and regularly bring everyone VR expertise that is easy to understand and worth learning.

| What is the next generation?

The term "next generation" comes from Japanese and means the next era, the future. So-called next-generation technology refers to advanced technology that is not yet in wide use. Classic next-generation games such as Watch Dogs, the Battlefield series, the Call of Duty series, and the GTA series have won a large number of fans.

With the birth of VR games, the next-generation gaming experience has made a qualitative leap. VR frees players from traditional games that are developed in 3D but viewed on a 2D screen, and places them "in person" inside the game scene to feel its atmosphere. Players can not only look at scenes, props, and characters up close, but also experience the game's dazzling special effects at close range. VR fulfills the ultimate dream of next-generation gamers.

However, VR game development still follows the production process of next-generation games. Today's mainstream VR games are built with Unreal Engine, CryEngine, and Unity, all of which are next-generation engines.

(game character model 360° rotation display)

Compared with traditional games, next-generation games can convey the look of a high-precision model with a reduced number of faces, and their textures are built around realistic effects, with a greater emphasis on how materials look and feel. Characters are the most direct part of the next-generation game experience. Because realistic visuals give them a distinctive personality and charm, they are the part most easily loved by gamers.

(Next Generation Game Characters - Full of Future Technology)

(LOW POLY game image - low polygon combination)

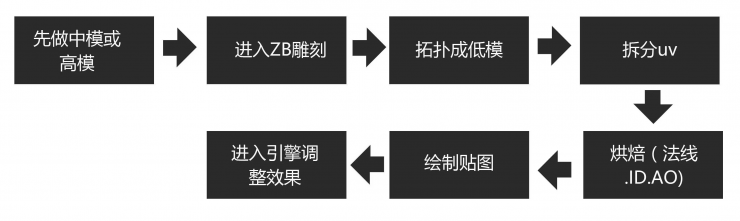

| How are these appealing next-generation game characters made?

Next-generation character production enriches the detail of the model with color maps, specular maps, normal maps, emissive maps, and many other texture types to distinguish the materials of different objects, and finally renders the result in the engine. Put that way, many non-professional readers may be confused, so let's break it down step by step. Commanding Bin has briefly laid out the production process for next-generation VR game character modeling, along with some points that need attention.

(Next-generation game production process)

Model making

Producing a next-generation game character is a very tedious process. Steps such as baking the high-poly model and making textures are time-consuming, and sometimes most of the time goes into adjusting the lighting.

Therefore, to achieve a happy life without overtime, we should pay close attention to detail when building the initial model, and use as few faces as possible. The principle is that the edge layout should be as even and sensible as possible, so that the subdivided model can be sculpted with detail in ZBrush. In one sentence: use a streamlined low-poly model to show the effect of a high-poly model.

The low-precision model made at the start may look somewhat like the characters in older online games. In this simple model the structure of an object can be very rough, but the position of every structure must be very accurate. This ensures that the overall shape of the high-precision model produced later is correct.

Keep in mind that the model's edge layout should be clean, well organized, and as economical as possible. A character's edge layout must also suit the needs of animation. Every vertex must serve a purpose.

Production software: Maya, 3ds Max, ZBrush, etc.

Sculpting in ZBrush

As next-generation games have developed, game character production has adopted a brand-new approach. Digital sculpting software such as ZBrush and Mudbox has freed artists' hands and minds, letting us say goodbye to the mechanical modeling style that relies on the mouse and numeric parameters.

After the first modeling step, the character can be imported into ZBrush or similar software to sculpt the high-poly model. This sculpting step depends heavily on the modeler's aesthetic sense, which takes talent, accumulated experience, and cultivation.

Here is a tip: when you first start sculpting the high-poly model, pay close attention to the feel of the concept art. Just as in sketching, block out the large overall forms first, then work from the whole toward the details (modeling follows the same process). For example, the protagonist of "ConvictVR" is a warrior in steel armor with very cool moves. During sculpting, the goal is to capture the character's defining traits and show the different materials.

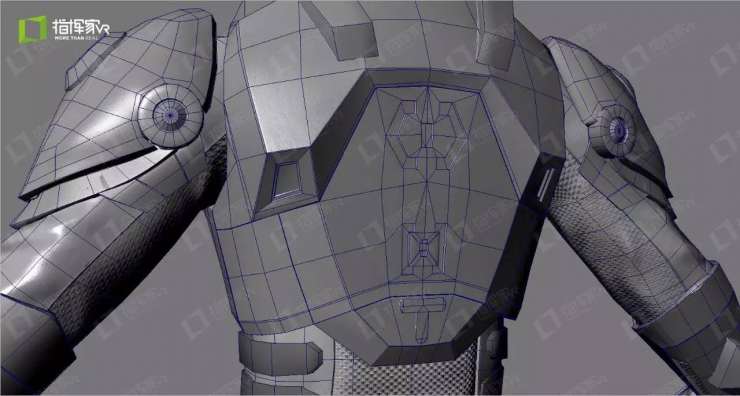

Retopologizing the low-poly model

The model that comes out of ZBrush sculpting is a high-poly model. Its very large face count is a real test of computer performance: the viewport can stutter or freeze, and a machine with a weak configuration may crash outright. So we now need to retopologize the highly subdivided model into a low-poly model.

The low-poly model reproduces the general shape of the high-poly model with a relatively small number of faces, while the structural details are carried mainly by the normal map. The following images illustrate this.

(Low-poly model, showing its low face count)

(Effect with added edges)

(Wireframe view with added edges)

(Effect with added edges and AO)

(Effect of the normal map on the low-poly model)

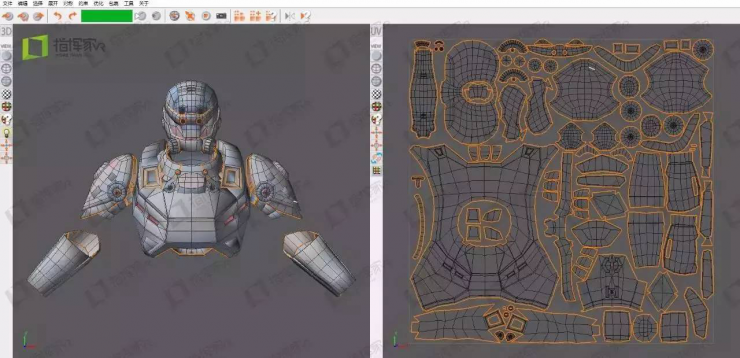

Unwrapping UVs

There are many ways to unwrap UVs, which are not listed here. The ultimate goal is for the UVs to display the texture at the highest possible pixel density without stretching. So let's go over several issues to watch for when unwrapping UVs.

UV unwrapping precautions

1. Do not cut the UVs into too many pieces

Some software on the market is already very smart, but if the UVs are broken into too many pieces, the edges may not display correctly.

2. Put UV seams in hidden places

Cut in places that are as hard to see as possible. If a seam sits in an obvious place, it will affect texturing later on.

3. Keep the UV scale consistent

Try to give every UV island the same scale, because the size of a UV island determines the resolution of the texture on that part. If some islands get many pixels and others get few, the resulting scene or character will be sharp in some places and blurry in others.

4. Lay out the UVs sensibly

The UV islands should be placed sensibly and should waste as little space as possible, because this determines the resolution and quality of the textures later.

Production Software: Unfold3D, UVLayout
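The point about consistent UV scale can be checked with a little arithmetic. The following is a minimal sketch, not a feature of any of the tools named above: the `UVIsland` class, the island names, the areas, and the 25% tolerance are all hypothetical values chosen for illustration. It estimates how many texture pixels each island gets per unit of model surface and flags islands that differ too much from the median.

```python
import math
from dataclasses import dataclass


@dataclass
class UVIsland:
    """Hypothetical record for one UV island (illustrative only)."""
    name: str
    surface_area: float  # area on the 3D model, in square scene units
    uv_area: float       # area in UV space, as a fraction of the 0..1 square


def texel_density(island: UVIsland, texture_size: int) -> float:
    """Texture pixels per scene unit along one axis for this island."""
    texels = island.uv_area * texture_size * texture_size
    return math.sqrt(texels / island.surface_area)


def inconsistent_islands(islands, texture_size, tolerance=0.25):
    """Names of islands whose density deviates from the median by more than tolerance."""
    densities = {i.name: texel_density(i, texture_size) for i in islands}
    ordered = sorted(densities.values())
    median = ordered[len(ordered) // 2]
    return [name for name, d in densities.items()
            if abs(d - median) / median > tolerance]


# Made-up example: the boots were given far too little UV space.
islands = [
    UVIsland("head", surface_area=0.5, uv_area=0.125),
    UVIsland("torso", surface_area=1.0, uv_area=0.25),
    UVIsland("boots", surface_area=1.0, uv_area=0.01),
]
flagged = inconsistent_islands(islands, texture_size=2048)
print(flagged)
```

With these made-up numbers, the head and torso share the same density while the boots receive far fewer pixels, which is exactly the "partly sharp, partly blurry" situation described in point 3.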

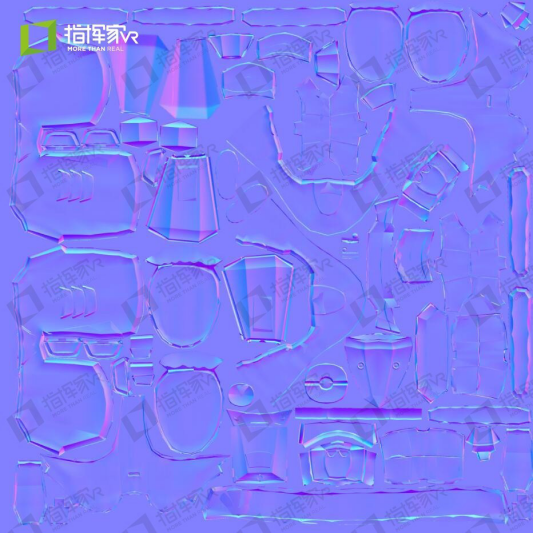

Baking normals

So what is a normal?

A normal is a line, usually drawn dashed, that is always perpendicular to a given plane. Its Chinese name likens it to a judge: upright and impartial.
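To make the definition concrete, here is a minimal sketch of how the normal of one triangular face can be computed with a cross product. The function name `face_normal` and the sample triangle are illustrative assumptions, not taken from any modeling package.

```python
import math


def face_normal(a, b, c):
    """Unit vector perpendicular to the triangle with corners a, b, c.

    The direction follows the right-hand rule for the corner order a, b, c.
    """
    # Two edge vectors that lie in the plane of the triangle.
    ux, uy, uz = (b[i] - a[i] for i in range(3))
    vx, vy, vz = (c[i] - a[i] for i in range(3))
    # The cross product of the edges is perpendicular to both of them.
    nx = uy * vz - uz * vy
    ny = uz * vx - ux * vz
    nz = ux * vy - uy * vx
    length = math.sqrt(nx * nx + ny * ny + nz * nz)
    if length == 0:
        raise ValueError("degenerate triangle has no normal")
    return (nx / length, ny / length, nz / length)


# A triangle lying flat in the XY plane: its normal points along the Z axis.
n = face_normal((0, 0, 0), (1, 0, 0), (0, 1, 0))
print(n)
```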

Anyone who has studied physics knows that the angle at which light strikes a surface is usually expressed as the angle between the light ray and the normal at that point. This means that if we record the normals of every point on the surface in a texture, we can later reuse that information to fake surface bumps and dents.
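The idea can be sketched in a few lines. The code below is an illustration under stated assumptions, not the method of any particular baker or engine: it uses the common convention of mapping each normal component from the range -1..1 to an 8-bit color value, and a simple Lambert (cosine) term for brightness. All function names are hypothetical.

```python
import math


def normalize(v):
    """Scale a 3D vector to length 1."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def lambert(normal, light_dir):
    """Brightness from the angle between the normal and the direction to the light."""
    n = normalize(normal)
    l = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(n, l)))


def encode_normal(normal):
    """Store a normal as an RGB pixel: each component maps from -1..1 to 0..255."""
    return tuple(round((c * 0.5 + 0.5) * 255) for c in normalize(normal))


def decode_normal(rgb):
    """Recover an approximate unit normal from an RGB pixel."""
    return normalize(tuple(c / 255 * 2 - 1 for c in rgb))


# A normal pointing straight out of the surface gives the familiar
# light blue of a flat normal map.
flat_pixel = encode_normal((0.0, 0.0, 1.0))
print(flat_pixel)

# A tilted normal stored in the texture makes a flat face shade as if it
# were sloped, which is the "false bump" effect.
tilted_pixel = encode_normal((0.0, 0.5, 0.5))
light = (0.0, 0.0, 1.0)
print(lambert(decode_normal(flat_pixel), light))
print(lambert(decode_normal(tilted_pixel), light))
```

The flat pixel shades at close to full brightness under a head-on light, while the tilted one comes out darker, even though the underlying low-poly face is the same.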

(game image split UV)

Painting textures

Next comes texturing. What are the characteristics of next-generation textures?

A next-generation texture set combines a color map, a normal map, a specular map, and a bump map.

Next-generation textures place great emphasis on realism. They build a virtual game world, and the elements used to construct that imagined world need some connection to the real one. Artists therefore need good aesthetic judgment, and they also need to observe real-world details closely and bring interesting elements and details into their textures.

Available software includes:

Substance Painter

Quixel SUITE

Mari

Bodypaint

Mudbox, etc.

(Combined textures)

(After putting a color map on a 3D model)

Importing into the engine

Once all models and textures have been created, they can be imported into the engine. Each texture is connected to the corresponding material node, and the parameters are adjusted according to how light behaves on the material. At this point, the character modeling work is complete.

Some readers may wonder whether this process is the same as that of many VR commercial projects. It is true that many VR commercial projects follow the next-generation game pipeline, but there is still a big difference between the two. For example, take VR real estate applications, which are very popular right now: the visuals they present are very different from those of a next-generation VR game. A VR show home is usually a brand-new building that recreates the living environment of an ideal future home, so the result looks clean and tidy.

(VRoom Evergrande Landscape City Project)

Next-generation game production, by contrast, recreates everything in the real world as it is, such as the run-down old house outside the window, dirty streets, rusted fighter planes, and weathered old ships.

That is all from Commanding Bin for now. If you have any questions or suggestions, feel free to leave a comment.

Lei Feng Network note: To reprint this article, please contact us for authorization, retain the source and author, and do not delete any content.
