How is AI used in autonomous driving? This article explains.

Editor's note: Artificial intelligence technologies based on deep learning architectures (such as deep neural networks, or DNNs) have long since spread across the globe. Their applications span the automotive market, computer vision, natural language processing, sensor fusion, object recognition, automated driving, and other fields. Today, autonomous driving start-ups, Internet companies, and OEMs are all exploring the use of graphics processing units (GPUs) for neural networks, aiming to bring vehicles into the era of autonomous driving as soon as possible.

Today, the industry's most sophisticated advanced driver assistance systems (ADAS) are generally built on integrated or open platforms. Getting to smarter, more complex ADAS and, ultimately, to fully autonomous driving requires developing and simulating systems until a complete solution emerges. This article describes the current state of deep learning architectures based on deep neural networks, which will act as a supercomputer in the car and become the core of an integrated vehicle platform, with a focus on how artificial intelligence is used in self-driving vehicles.

| What is deep learning?

Deep learning is currently the most popular approach to advancing AI. It enables machines to perceive and understand the world. A neural network consists of a large number of simple, trainable mathematical units that work together to learn complex behaviors, such as the driving tasks discussed in this article. [3]
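To make "simple, trainable mathematical units" concrete, here is a minimal sketch of one artificial neuron (a weighted sum of its inputs plus a bias, passed through a sigmoid activation) and a layer built from several of them. The weights are hypothetical, hand-picked values; in a real network, training sets them.

```python
import math

def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum of inputs plus a bias, squashed by a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weight_rows, biases):
    """A layer is many neurons reading the same inputs; each weight row belongs to one neuron."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Hypothetical, hand-picked weights; training would normally learn these values.
hidden = layer([0.5, -1.0], [[1.0, 2.0], [-1.0, 0.5]], [0.0, 0.1])
output = neuron(hidden, [1.5, -2.0], 0.0)
print(hidden, output)
```

Each unit on its own is trivial; the network's power comes from stacking many of them and letting training adjust their weights.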

At heart, deep learning is still a process that turns data into decisions made by computer programs. The biggest difference from traditional algorithm-based systems is that once the basic model is built, a deep learning system can learn by itself to complete its assigned task. [4] These tasks range widely: tagging pictures, understanding human language, letting drones carry out missions autonomously, and driving vehicles automatically. Deep learning can imitate the learning and cognitive patterns of the human brain, understand language and relationships, and recognize ambiguities in discourse. [5]

Neural networks are inherently parallel models, so they are a natural fit for multi-core GPUs, which play an important role in PCs, robots, and vehicles. GPUs can fully exploit the parallelism of neural networks, which gives them major advantages in defining, training, optimizing, and deploying deep learning systems. As the U.S. magazine Popular Science once put it, "GPU is the backbone of modern AI technology." [6]

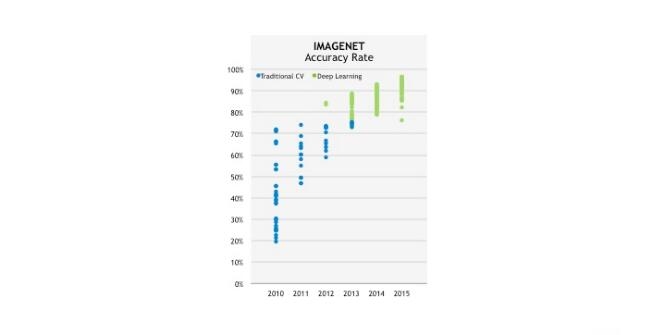

ImageNet

One of the clearest examples of deep learning at work is the ImageNet Large Scale Visual Recognition Challenge, which evaluates algorithms for object recognition and image and scene classification on large-scale image and video collections. [7] Before 2012, traditional computer vision algorithms had hit a bottleneck, and object recognition accuracy improved slowly, staying below 70%. After deep learning was introduced in 2012, accuracy jumped to 80%, and it has since risen to 95%. In this challenge, deep learning has completely replaced traditional computer vision algorithms (see Exhibit 1).
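ILSVRC classification results are commonly reported as top-5 error: a prediction counts as correct if the true class is among the model's five highest-scoring guesses. The article does not say which metric its accuracy figures use, so treat this as a sketch of the usual metric, run on made-up scores for two images and six classes.

```python
def top_k_accuracy(scores, labels, k=5):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for class_scores, true_label in zip(scores, labels):
        # Rank class indices by score, highest first, and keep the top k.
        ranked = sorted(range(len(class_scores)), key=lambda c: class_scores[c], reverse=True)
        hits += true_label in ranked[:k]
    return hits / len(labels)

# Hypothetical classifier scores for 2 images over 6 classes.
scores = [
    [0.10, 0.50, 0.20, 0.05, 0.10, 0.05],
    [0.30, 0.02, 0.08, 0.40, 0.15, 0.05],
]
labels = [1, 2]
print(top_k_accuracy(scores, labels, k=1), top_k_accuracy(scores, labels, k=5))
```

With these scores the second image's top guess is wrong, so top-1 accuracy is 0.5, while top-5 accuracy is 1.0 because the true class still appears among the five best guesses.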

Exhibit 1 : Comparison of Accuracy Between Traditional Computer Vision and Deep Learning in the ImageNet Challenge

| The status of deep learning in the high-tech industry

Social media giant Facebook was the first company in the industry to use GPU accelerators to train deep neural networks. Deep neural networks and GPUs play an important role in its new Big Sur computing platform and in Facebook AI Research (FAIR). Facebook says its goal is to keep advancing machine intelligence and to find better ways for people to communicate. [8]

Google has also invested heavily in deep learning. TensorFlow, the company's second-generation machine learning system, is designed to make sense of large amounts of data and models. It is flexible enough to handle many kinds of tasks, such as perception and speech understanding, which gives it particular strengths in image recognition and classification, text analysis, and similar areas. Google has dramatically increased its deep learning capacity with thousands of GPUs; a platform built on a similar number of CPUs would have only one-tenth the power. [9]

Anelia Angelova, a Google researcher working on computer vision and machine learning, says that Google also uses cascaded deep neural networks in its self-driving car project to help vehicles detect pedestrians on the road. [10]

| The autonomous driving loop

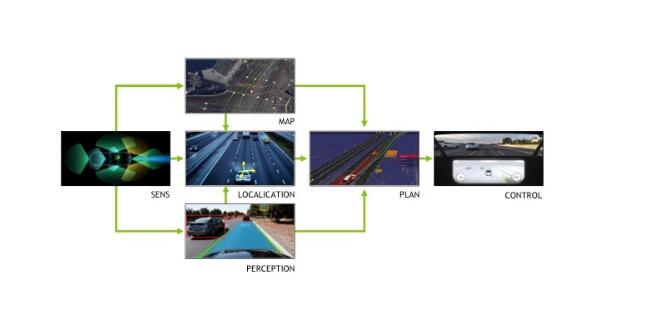

Chart 2: Autopilot loop

Chart 2 shows the main components of the autopilot loop. The goal is to provide real-time, 360-degree sensing around the vehicle using cameras, lidars, and ultrasonic sensors. From the data these devices collect, the algorithms can accurately understand the vehicle's surroundings, including static and dynamic objects, and respond accordingly. Adding a deep neural network greatly improves the vehicle's ability to detect and classify surrounding objects, which makes sensor data fusion more accurate. The processed data then becomes the basis for the vehicle's perception, localization, and route planning.

This complex process has three steps.

The first step, "perception," covers sensor data fusion, object detection and classification, and object tracking and segmentation.

The second step is "localization," which includes map fusion, landmark recognition, and GPS positioning. Knowing exactly where it is matters to a self-driving vehicle because it is a precondition for safe driving, and integrating high-precision map data is the key to pinpointing the vehicle's location.

The last step is "path planning," which covers the vehicle's route and behavior. A self-driving vehicle must safely avoid potential risks in a highly dynamic environment, use complex algorithms to find a drivable path, and predict how the environment will change. It must also keep traffic flowing smoothly and minimize disturbance to passengers and other vehicles. Path planning has to take all of these factors into account and ultimately produce the best possible solution.
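The three steps can be sketched as a simple pipeline in which each stage feeds the next. Every function, field name, and the 30 m safety gap below are hypothetical placeholders; in a real system each stage would be a deep neural network or a full localization and planning stack, not a one-line rule.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    label: str          # object class, e.g. "car" or "pedestrian"
    distance_m: float   # distance ahead of our vehicle, in metres
    lane: int           # lane the object occupies

def perceive(sensor_frame):
    """Step 1 (hypothetical): turn fused sensor data into classified objects."""
    return [Detection(**obj) for obj in sensor_frame["objects"]]

def localize(sensor_frame):
    """Step 2 (hypothetical): report our lane, as GPS plus map matching would."""
    return sensor_frame["ego_lane"]

def plan(detections, ego_lane, safe_gap_m=30.0):
    """Step 3 (hypothetical): slow down if anything in our lane is closer than safe_gap_m."""
    blocked = any(d.lane == ego_lane and d.distance_m < safe_gap_m for d in detections)
    return "slow_down" if blocked else "keep_lane"

def autopilot_step(sensor_frame):
    detections = perceive(sensor_frame)
    ego_lane = localize(sensor_frame)
    return plan(detections, ego_lane)

frame = {"ego_lane": 1, "objects": [{"label": "car", "distance_m": 20.0, "lane": 1}]}
print(autopilot_step(frame))
```

Keeping the stages separate mirrors the loop in Chart 2: perception output and the vehicle's position are both inputs to planning, and each stage can be replaced or retrained independently.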

Accomplishing all this is beyond what an in-car smart camera can do alone: each step requires a deep neural network. Using deep neural networks, vehicles must detect and classify objects on the road, identify landmarks, and make decisions while driving. In addition, the deep neural network platform is completely open, so each carmaker or Tier 1 supplier can build its own solution and keep its products from becoming indistinguishable from competitors'.

| Deep Learning Process

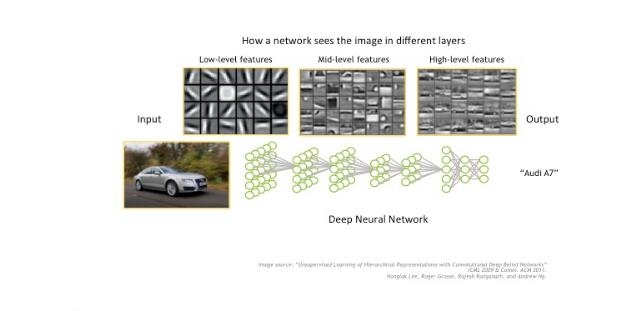

Deep neural networks are built by stacking multiple layers of neurons. In object recognition, neurons in the first layer detect edges; neurons in the second layer recognize more complex shapes formed by combining those edges, such as triangles and rectangles; and neurons in the third layer recognize still more complex structures. Once such a network framework has been built, it can be applied to many specialized problems.
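The claim that first-layer neurons "detect edges" can be illustrated with a single convolution filter. This is a minimal sketch: the vertical-edge kernel and the tiny image are hand-written, hypothetical values, whereas a trained network learns its own filters and later layers combine their responses into shapes.

```python
def convolve2d(image, kernel):
    """Valid 2D cross-correlation, the operation deep learning frameworks call 'convolution'."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj] for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

# Hand-written vertical-edge filter, similar in spirit to what first-layer neurons often learn.
vertical_edge = [[-1, 0, 1],
                 [-1, 0, 1],
                 [-1, 0, 1]]

# Hypothetical 3x6 image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9, 9],
         [0, 0, 0, 9, 9, 9],
         [0, 0, 0, 9, 9, 9]]

print(convolve2d(image, vertical_edge))
```

The filter responds (27) only where its window straddles the dark-to-bright boundary and gives 0 over uniform regions, which is exactly the "edge detector" behavior described above.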

The great challenge for autopilot technology is that road conditions in congested cities are very complicated and hard to predict. Researchers therefore need to combine many sensors and data sources to accurately locate the vehicle, sense road conditions, plan routes, and control the steering.

Deep learning is well suited to exactly these difficult problems. Developers can choose among neural network frameworks such as Caffe. Caffe was developed by the Berkeley Vision and Learning Center (BVLC) and is known for its expressiveness, speed, and modularity, which make it well suited to the challenges of autonomous driving. [11]

Exhibit 3: Object Recognition Process for Deep Neural Networks

Once a framework has been chosen, the network must be trained for a specific task; object detection and classification are typical examples. As in sports, a deep neural network needs a coach to guide how it responds.

The scoring function shown in Exhibit 4 measures the difference between the expected output and the actual output; this difference is the prediction error. The error is passed back through the network and used to adjust the weights of the connections between neurons, and repeating this process makes the network's responses steadily more accurate. [12] Once trained, a deep neural network can respond correctly to new inputs without a programmer intervening.
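The training loop described above (score the output, measure the error, adjust the weights, repeat) can be shown on the smallest possible model: one linear neuron fitted by gradient descent. Using mean squared error as the scoring function, the learning rate, and the toy data are all assumptions for illustration; a deep network computes the same kind of gradients for every layer via backpropagation.

```python
def train(samples, lr=0.05, epochs=500):
    """Fit prediction = w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(samples)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, target in samples:
            error = (w * x + b) - target   # prediction error: actual minus expected output
            grad_w += 2 * error * x / n    # how the squared error changes with w
            grad_b += 2 * error / n        # how the squared error changes with b
        # Step the weights against the gradient so the error shrinks on the next pass.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(samples, w, b):
    return sum(((w * x + b) - t) ** 2 for x, t in samples) / len(samples)

# Hypothetical training data generated from target = 2x + 1.
data = [(x, 2 * x + 1) for x in [0.0, 1.0, 2.0, 3.0]]
w, b = train(data)
print(round(w, 3), round(b, 3), mse(data, w, b))
```

After training, the learned weights end up very close to the values that generated the data, without anyone programming them in.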

Exhibit 4: Deep neural network training loop

To prepare for training, developers must first build a dataset of pictures of driving scenes and, before training, tag each picture with the correct label or the correct driving decision. Once the dataset is in place, the model framework can be configured and trained.
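A labeled dataset can be as simple as a list of (image, label) records. The sketch below uses a hypothetical `LabeledFrame` record, made-up file paths, and made-up decision labels; it checks that every frame is labeled before training and holds out a reproducible validation share, a common practice the article does not spell out.

```python
import random
from dataclasses import dataclass

@dataclass
class LabeledFrame:
    image_path: str  # hypothetical path to one camera frame
    label: str       # object class, or the correct driving decision for this frame

def split_dataset(frames, val_fraction=0.2, seed=0):
    """Check that every frame is labeled, then shuffle reproducibly and hold out a validation share."""
    unlabeled = [f.image_path for f in frames if not f.label]
    if unlabeled:
        raise ValueError(f"unlabeled frames: {unlabeled}")
    shuffled = list(frames)
    random.Random(seed).shuffle(shuffled)
    n_val = int(len(shuffled) * val_fraction)
    return shuffled[n_val:], shuffled[:n_val]

frames = [
    LabeledFrame(f"frames/{i:04d}.png", "steer_left" if i % 2 else "keep_straight")
    for i in range(10)
]
train_set, val_set = split_dataset(frames)
print(len(train_set), len(val_set))
```

Fixing the shuffle seed keeps the split identical between runs, so accuracy changes can be traced to the model rather than to a different split.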

R&D staff then test the trained network offline under simulated driving conditions. Once it passes verification, it "graduates" and can be flashed onto the driving computer (ECU) of a self-driving vehicle for road testing. End-to-end system training follows a similar scheme.
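The "graduation" step amounts to a gate: evaluate the trained model on held-out simulated scenarios and release it only if it clears a bar. The sketch below assumes a hypothetical 99% accuracy threshold and replaces the neural network with a one-line toy rule; real verification covers far more scenarios and metrics than plain accuracy.

```python
def evaluate(model, test_cases):
    """Accuracy of a model on held-out (input, expected_decision) pairs."""
    correct = sum(model(inp) == expected for inp, expected in test_cases)
    return correct / len(test_cases)

def ready_to_deploy(model, simulated_cases, min_accuracy=0.99):
    """Offline gate: only a model that clears the (hypothetical) threshold 'graduates'."""
    return evaluate(model, simulated_cases) >= min_accuracy

# Toy stand-in for a trained network: brake if an obstacle is closer than 25 m.
def toy_model(distance_m):
    return "brake" if distance_m < 25 else "cruise"

# Hypothetical simulated scenarios: (obstacle distance in m, correct decision).
simulated = [(10, "brake"), (20, "brake"), (40, "cruise"), (60, "cruise")]
print(ready_to_deploy(toy_model, simulated))
```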

Chart 5: Driving Scene

Chart 5 shows a common real-world driving scenario, with a view typical of a U.S. highway. Researchers feed this data into a deep neural network-based autopilot system, and the window below the image visualizes the resulting data. The white vehicle in the center of the window has detected the two cars around it. Based on the relative speed, position, and other data for the vehicles, the route planning system selects the best route (the green line in the figure) and decides whether to change course as the situation develops.
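One simple way to turn relative speed and position into a lane decision is to compare each lane's time-to-collision with the nearest car ahead. The article does not describe the planner's actual logic, so this is a hypothetical sketch: the scene values and the 2-second "clear gain" margin (which stops the car from weaving between lanes) are assumptions, and a real planner would also check traffic behind and beside the vehicle.

```python
import math

def time_to_collision(gap_m, closing_speed_mps):
    """Seconds until we reach the car ahead; infinite if we are not closing in."""
    return gap_m / closing_speed_mps if closing_speed_mps > 0 else math.inf

def choose_lane(current_lane, lanes, min_gain_s=2.0):
    """Pick the lane with the largest time-to-collision, changing only for a clear gain.

    lanes maps lane id -> (gap to nearest car ahead in m, closing speed in m/s).
    """
    ttc = {lane: time_to_collision(*lanes[lane]) for lane in lanes}
    best = max(ttc, key=ttc.get)
    if ttc[best] - ttc[current_lane] >= min_gain_s:
        return best
    return current_lane

# Hypothetical scene: a slower car 30 m ahead in our lane, more room in the lane to the left.
lanes = {0: (80.0, 2.0), 1: (30.0, 5.0)}
print(choose_lane(current_lane=1, lanes=lanes))
```

Here our lane gives 6 seconds before we close on the car ahead and lane 0 gives 40, so the sketch chooses to move to lane 0.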

| Nvidia DRIVE™ Solutions

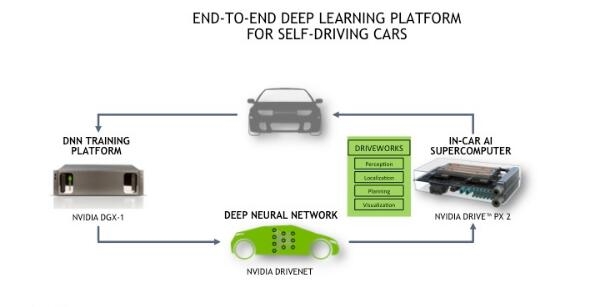

Exhibit 6: End-to-end deep learning platform

Nvidia now offers an integrated platform for training, testing, and deploying autonomous vehicles. The DRIVE solution gives manufacturers, Tier 1 suppliers, and research institutions more power and flexibility, enabling them to build systems that let vehicles observe, think, and learn. The solution starts with Nvidia's DGX-1, a deep learning supercomputer that trains deep neural networks on data collected while driving. DRIVE PX 2 then runs inference in the vehicle to keep it safe on the road. The two are connected by Nvidia DriveWorks, a set of tools, libraries, and models that greatly accelerates the development, simulation, and testing of self-driving vehicles.

DriveWorks helps calibrate sensors, acquire data about the vehicle's surroundings, and synchronize and process sensor data using complex algorithms running on DRIVE PX 2.
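Sensor synchronization is needed because cameras and lidars capture data at different rates. The sketch below is not the DriveWorks API; it only illustrates the general idea of pairing each camera frame with the lidar sweep nearest in time and discarding pairs that are too far apart. The timestamps and the 20 ms tolerance are hypothetical.

```python
import bisect

def synchronize(camera_times, lidar_times, max_skew_s=0.02):
    """Pair each camera frame with the lidar sweep closest in time.

    Both lists hold timestamps in seconds, sorted ascending. Pairs farther apart
    than max_skew_s are dropped rather than fused.
    """
    pairs = []
    for t_cam in camera_times:
        i = bisect.bisect_left(lidar_times, t_cam)
        # The nearest lidar timestamp is either just before or just after t_cam.
        candidates = [lidar_times[j] for j in (i - 1, i) if 0 <= j < len(lidar_times)]
        if not candidates:
            continue
        t_lidar = min(candidates, key=lambda t: abs(t - t_cam))
        if abs(t_lidar - t_cam) <= max_skew_s:
            pairs.append((t_cam, t_lidar))
    return pairs

camera = [0.000, 0.033, 0.066, 0.100]  # hypothetical ~30 Hz camera
lidar = [0.000, 0.050, 0.100]          # hypothetical 20 Hz lidar
print(synchronize(camera, lidar))
```

Tightening `max_skew_s` trades coverage for accuracy: with a 10 ms tolerance only the two exactly aligned frames survive.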

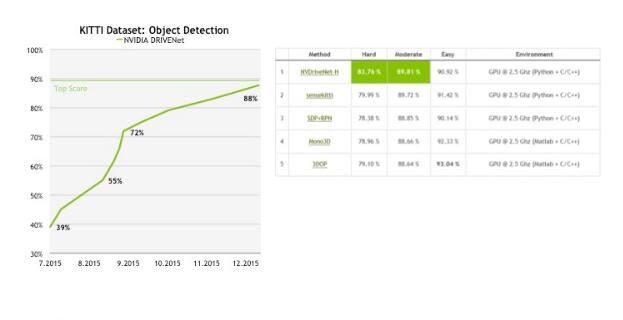

| KITTI Benchmark

Nvidia used the DRIVE solution to develop its own object recognition system, built around a neural network framework called DRIVENet. Within five months, this solution achieved the highest score on the KITTI benchmark. Most importantly, DRIVENet makes decisions in real time. Notably, systems running on Nvidia GPUs took all of the top five scores. The KITTI benchmark, jointly created by the Karlsruhe Institute of Technology and the Toyota Technological Institute at Chicago, evaluates how effectively object recognition systems perform. [13]
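Object detection benchmarks such as KITTI count a detection as correct only if its bounding box overlaps the ground-truth box enough, measured by intersection over union (IoU); KITTI's car class requires 0.7, and its pedestrian and cyclist classes 0.5. The sketch below shows only this overlap test, not the full average-precision scoring, and the boxes are hypothetical pixel coordinates.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def is_true_positive(pred_box, gt_box, threshold=0.7):
    """A detection counts only if it overlaps the ground truth enough (0.7 here, as for cars)."""
    return iou(pred_box, gt_box) >= threshold

ground_truth = (100, 100, 200, 200)
prediction = (110, 100, 210, 200)  # shifted 10 px to the right
print(round(iou(prediction, ground_truth), 3), is_true_positive(prediction, ground_truth))
```

A 10-pixel shift on a 100-pixel box still leaves an IoU of about 0.82, so this detection would count; a larger offset would fall below the threshold and be scored as a miss.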

Exhibit 7: KITTI benchmark test scores

Many autonomous driving companies already use Nvidia's deep learning technology, which lets them train neural networks 30 to 40 times faster. BMW, Daimler, and Ford are Nvidia users, as are the Japanese start-ups Preferred Networks and ZMP. In field tests, Audi used this technology to train a deep neural network in four hours, a workload that took two years with a conventional smart-camera approach. Volvo plans to put NVIDIA DRIVE PX 2 into real cars and test them in Gothenburg.

| Outlook for the future

BI Intelligence predicts that by 2020, 10 million vehicles worldwide will have some degree of autonomy. [12] Many of them will need AI to perceive their surroundings, determine their location, and handle complex traffic conditions.

Exhibit 9: Future market growth expectations for vehicles equipped with autopilot [3]

An arms race in autonomous driving is already under way, and more new companies will join it. At the same time, as these companies push forward, the more than 100 onboard driving-computer solutions now on the market will eventually converge.

1. Introduction to deep learning, GTC 2015 Webinar, NVIDIA, July 2015, http://on-demand.gputechconf.com/gtc/2015/webinar/deep-learning-course/intro-to-deep-learning.pdf

2. The Crown Jewel of Technology Just Crushed Earnings, Ophir Gottlieb, February 17, 2016, Capital Market Laboratories, http://ophirgottlieb.tumblr.com/post/139506538909/the-crown-jewel-of-technology-just-crushed

3. Google's release of TensorFlow could be a game-changer in the future of AI, David Tuffley, November 13, 2015, PHYS.ORG, http://phys.org/news/2015-11-google-tensorflow-game-changer-future-ai.html

4. Facebook Open-Sources The Computers Behind Its Artificial Intelligence, Dave Gershgorn, December 10, 2015, Popular Science, http://

5. IMAGENET Large Scale Visual Recognition Challenge (ILSVRC), http://LSVRC/

6. Facebook AI Research (FAIR), https://research.facebook.com/ai

7. Google's Open Source Machine Learning System: TensorFlow, Mike Schuster, Google, January 15, 2016, NVIDIA Conference, Tokyo

Via automotive-eetimes
