Introducing NIMA, a new deep CNN model that can judge which picture looks best

Google today published a new deep CNN model, NIMA (Neural Image Assessment), which can judge which picture looks best at a level close to that of humans.

Quantifying image quality and aesthetics has been a long-standing problem in image processing and computer vision. Technical quality assessment measures pixel-level degradations such as noise, blur, and compression artifacts, while aesthetic assessment captures semantic-level characteristics associated with emotion and beauty in an image. Recently, deep convolutional neural networks (CNNs) trained on human-labeled data have been used to address the subjective nature of image quality for specific classes of images, such as landscapes. However, these methods usually just classify images into two categories, low quality and high quality, which is a narrow scope. Our proposed method instead predicts the distribution of ratings, which gives quality predictions closer to the real ratings and applies to general images.

In the NIMA: Neural Image Assessment paper, we introduce a deep convolutional neural network trained to predict which images a typical user would rate as technically good and which as aesthetically attractive. NIMA builds on state-of-the-art deep object recognition networks, which lets it understand general categories of objects despite their many variations. Our proposed network not only scores images reliably and in close agreement with human perception, it can also help with a variety of labor-intensive and subjective tasks, such as smart photo editing, optimizing visual quality, or discovering visual errors in imaging pipelines.

Background

In general, image quality assessment can be divided into full-reference and no-reference approaches. If an ideal reference image is available, image quality metrics such as PSNR and SSIM are used. When no reference image is available, no-reference methods rely on statistical models to predict image quality. The main goal of both approaches is to predict a quality score that correlates well with human perception. When a deep convolutional neural network is used to assess image quality, its weights are first initialized by training on an object classification dataset (e.g., ImageNet) and then fine-tuned on annotated data for the perceptual quality assessment task.
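To make the full-reference case concrete, here is a minimal sketch of PSNR in plain Python. It assumes 8-bit intensities stored as flat lists, and the function name and sample pixel values are illustrative only, not taken from the NIMA work.

```python
import math

def psnr(reference, distorted, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are flat sequences of pixel intensities (an assumption
    made to keep the sketch dependency-free).
    """
    if len(reference) != len(distorted) or len(reference) == 0:
        raise ValueError("images must be non-empty and the same size")
    # Mean squared error over all pixels.
    mse = sum((r - d) ** 2 for r, d in zip(reference, distorted)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: no distortion at all
    return 10.0 * math.log10(max_value ** 2 / mse)

# Illustrative pixel values only.
reference = [52, 55, 61, 59, 79, 61, 76, 61]
slightly_noisy = [54, 53, 60, 62, 77, 63, 75, 60]
very_noisy = [80, 20, 90, 30, 120, 10, 110, 5]

print(f"slight noise: {psnr(reference, slightly_noisy):.2f} dB")
print(f"heavy noise:  {psnr(reference, very_noisy):.2f} dB")
```

A higher PSNR means the distorted image is closer to the reference. The metric cannot be computed at all without a reference, which is the gap no-reference methods try to fill.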

NIMA

Typical aesthetic prediction methods classify an image as either low or high quality. This ignores the fact that each image in the training data is associated with a histogram of human ratings rather than a single binary label. The rating histogram indicates the overall quality of the image as well as how much the raters agree with each other. In our new approach, the NIMA model neither classifies the image as high or low quality nor regresses to the mean score. Instead, it produces a rating distribution for any given image: on a scale of 1 to 10, NIMA assigns a likelihood to each possible score. This matches how the training data is typically collected, and it turns out to predict human preferences better than other approaches.
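The idea of a rating distribution can be sketched in a few lines of Python. This is only an illustration: it assumes the network ends in ten raw outputs that are normalized with a softmax, which this post does not specify, and the logits are made-up numbers.

```python
import math

SCORES = range(1, 11)  # the 1-to-10 rating scale

def softmax(logits):
    """Turn raw outputs into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def mean_score(dist):
    """Expected rating under the predicted distribution."""
    return sum(s * p for s, p in zip(SCORES, dist))

def score_std(dist):
    """Spread of the ratings; a larger value means less rater agreement."""
    mu = mean_score(dist)
    return math.sqrt(sum(p * (s - mu) ** 2 for s, p in zip(SCORES, dist)))

# Hypothetical raw network outputs for one image (not real model output).
logits = [-2.0, -1.0, 0.0, 1.0, 2.0, 2.5, 2.0, 1.0, 0.0, -1.0]
dist = softmax(logits)

print(f"mean score: {mean_score(dist):.2f}")
print(f"std dev:    {score_std(dist):.2f}")
```

Keeping the whole distribution preserves information that a single label throws away: two images can share the same mean score while one of them divides raters far more than the other.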

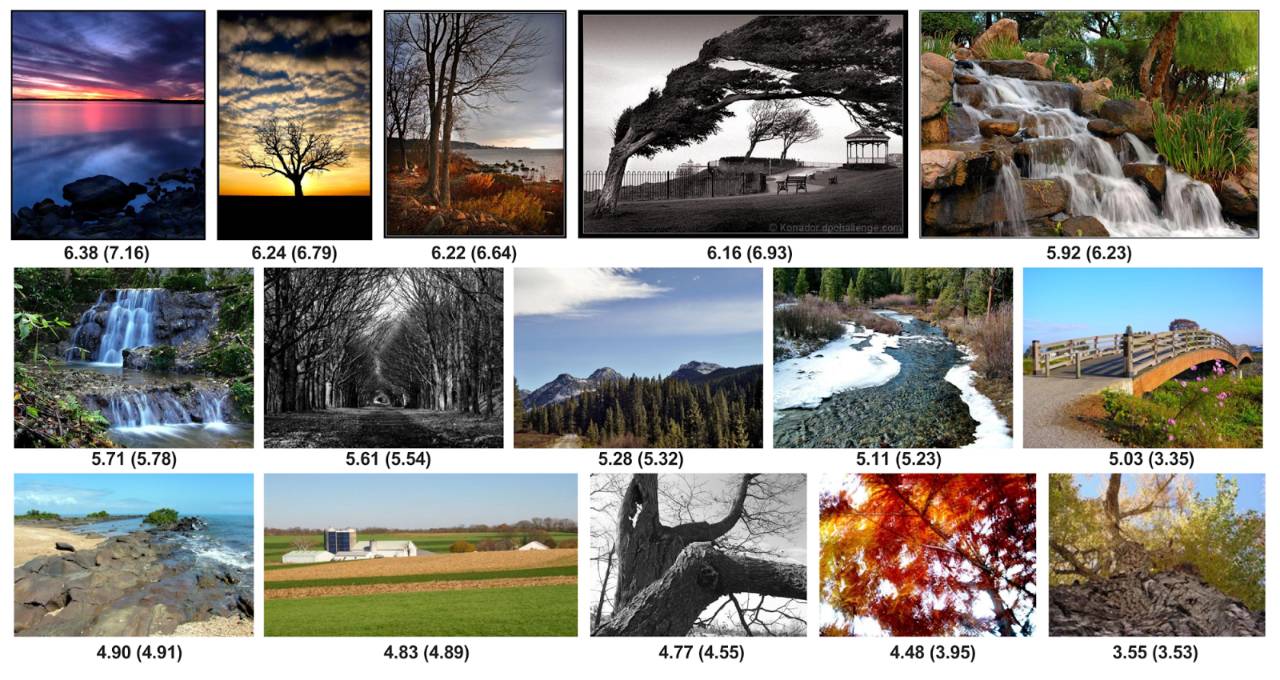

Various functions of NIMA's score vector, such as the mean, can then be used to rank images by attractiveness. The following shows images ranked by NIMA, all from the AVA dataset. Each picture in AVA was scored by an average of 200 people. After training, NIMA's aesthetic ranking of these pictures was very close to the mean scores given by the human raters. We found that NIMA performed equally well on other datasets, with predicted quality scores close to the human ratings.

Ranking of AVA pictures with the "Landscape" tag. Human scores are in parentheses, and NIMA's predicted scores are outside the parentheses.

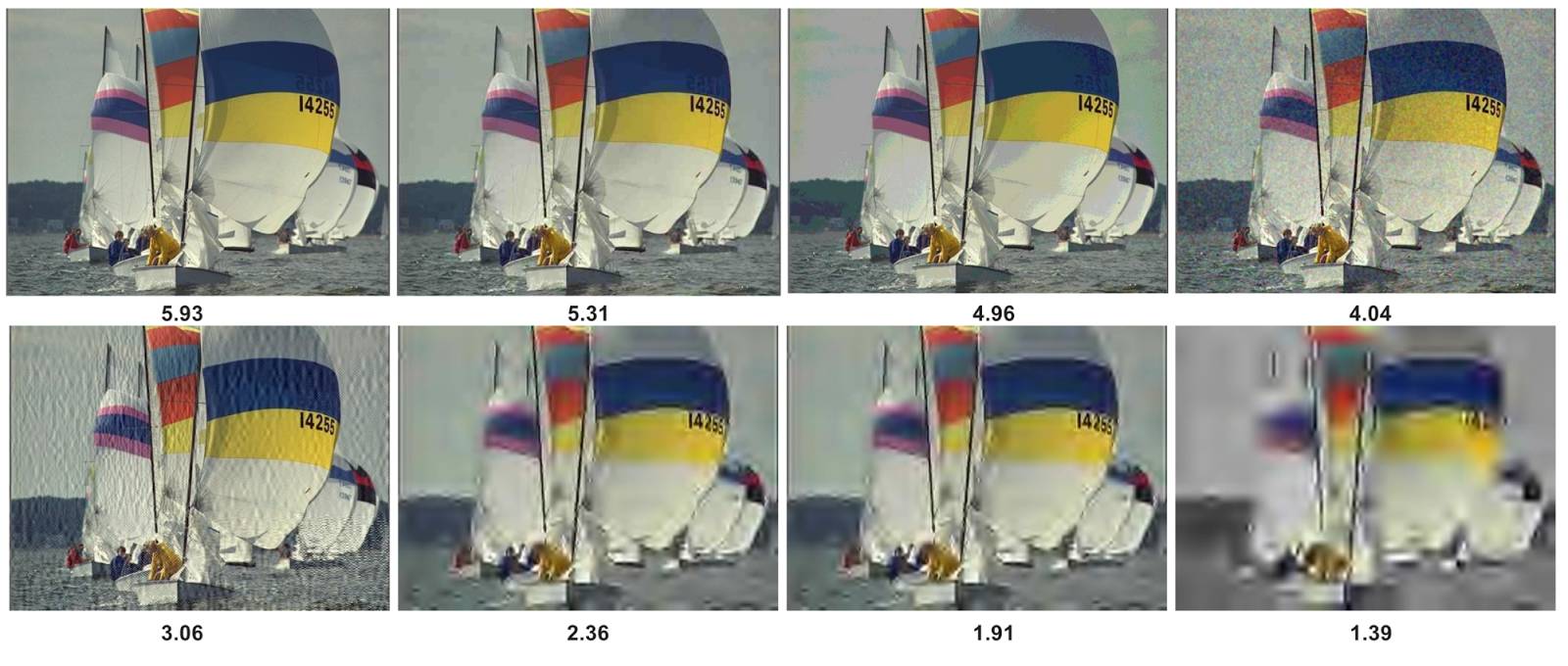
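Ranking by a function of the score vector can be sketched as follows, using the mean as the ranking function. The file names and distributions are invented for illustration and are not AVA data.

```python
def mean_score(dist):
    """Expected rating for a distribution over the scores 1..10."""
    return sum(score * p for score, p in enumerate(dist, start=1))

def rank_by_mean(predictions):
    """Return (name, mean) pairs sorted from highest to lowest mean score."""
    scored = [(name, mean_score(dist)) for name, dist in predictions.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)

# Hypothetical predicted rating distributions, one per image.
predictions = {
    "a.jpg": [0.0, 0.0, 0.05, 0.10, 0.20, 0.30, 0.20, 0.10, 0.05, 0.0],
    "b.jpg": [0.05, 0.10, 0.20, 0.30, 0.20, 0.10, 0.05, 0.0, 0.0, 0.0],
    "c.jpg": [0.0, 0.0, 0.0, 0.05, 0.10, 0.20, 0.30, 0.20, 0.10, 0.05],
}

for name, score in rank_by_mean(predictions):
    print(f"{name}: {score:.2f}")
```

Other summaries of the distribution, such as the median or the mean penalized by the standard deviation, could be swapped in as the sort key without changing the rest of the sketch.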

NIMA scores can also be used to compare the quality of a distorted image against the original. The following examples are part of the TID2013 test set, which contains image distortions of various types and levels.

Perceptual Image Enhancement

As we showed in another paper, quality and attractiveness scores can also be used to tune image enhancement operators. In other words, maximizing the NIMA score as part of the loss function can improve the perceived quality of image enhancement. The example in the figure below shows NIMA used as a training loss to tune a tone enhancement algorithm. We found that baseline aesthetic scores can be improved by contrast adjustments guided by the NIMA score. Our model is therefore able to guide a deep convolutional neural network filter toward aesthetically near-optimal settings of parameters such as brightness, highlights, and shadows.

Tone and contrast of the original image adjusted by a CNN trained with NIMA as its loss
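The principle of letting a quality score steer an enhancement parameter can be illustrated with a toy example. This sketch is much simpler than the approach described above: it runs a grid search over a single brightness gain instead of training a CNN, and `toy_score` is a hypothetical stand-in for a NIMA mean score, not the real model.

```python
def adjust_brightness(pixels, gain):
    """Scale pixel intensities by gain, clipping to the 0-255 range."""
    return [min(255.0, max(0.0, p * gain)) for p in pixels]

def toy_score(pixels):
    """Hypothetical stand-in for a NIMA mean score (NOT the real model).

    It simply rewards a mean intensity near mid-gray and returns a
    value between 1 and 10.
    """
    mean = sum(pixels) / len(pixels)
    return 10.0 - 9.0 * abs(mean - 128.0) / 128.0

def best_gain(pixels, score_fn, candidates):
    """Pick the candidate gain whose adjusted image scores highest."""
    return max(candidates, key=lambda g: score_fn(adjust_brightness(pixels, g)))

# An underexposed image, as a flat list of illustrative intensities.
dark_image = [20, 35, 40, 55, 60, 70, 80, 90]
candidates = [0.5 + 0.25 * i for i in range(15)]  # 0.5, 0.75, ..., 4.0

gain = best_gain(dark_image, toy_score, candidates)
enhanced = adjust_brightness(dark_image, gain)
print(f"chosen gain: {gain}")
print(f"score before: {toy_score(dark_image):.2f}, after: {toy_score(enhanced):.2f}")
```

With a differentiable scorer, the grid search would be replaced by gradient-based training, with the negative score acting as the loss term.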

Looking to the future

Our results with NIMA suggest that quality assessment models based on machine learning can be very versatile. For example, they could make it easy for users to find the best photos among many, or even provide real-time feedback while users take photos. In post-processing, such models can guide enhancement operators toward perceptually better results.

Simply put, NIMA and similar networks can serve as reasonable, though imperfect, proxies for human taste in images and possibly video. As the saying goes, to each their own: every person evaluates a photo differently, so it is very difficult to capture everyone's aesthetic preferences. We will continue to retrain and test our models and look forward to sharing more results.
