How to quickly build a complete mobile broadcast system?

Lei Feng network note: the author of this article, Jiang Haibing, is a product director and a veteran of the live streaming industry.

The mobile live broadcast industry will stay hot for a long time, and as it integrates with other industries it will open up almost unlimited possibilities. There are three main reasons:

First, the UGC (user-generated content) production model is far more pronounced in mobile live broadcasting than in PC-based live broadcasting. Everyone carries a device and can broadcast anytime, anywhere. This fits the open principles of the Internet era and encourages more people to create and distribute high-quality content.

Second, network bandwidth and speed are steadily increasing while network costs are steadily falling, which provides an excellent environment for mobile live broadcasting. Text, sound, video, games, and more can all be presented in a mobile live broadcast to create a richer user experience. Live streaming can also be integrated into a company's own applications in the form of an SDK. For example, after-school tutoring in education can be delivered as a live broadcast, and e-commerce can use live streaming to help users choose products and to promote sales.

Third, combining mobile live broadcasting with VR/AR technology opens new room for growth for the whole industry. VR/AR live broadcasting gives users the feeling of being on the scene, brings the interaction between anchor and audience closer to real life, and greatly increases user participation on the platform.

At present, Internet companies with technical strength and traffic advantages are unwilling to miss the live streaming wave, and how to build a live broadcast system quickly has become a common concern. I would like to share my experience here. I work at a company that develops live streaming products, and our products use a live broadcast SDK from a cloud service provider so that we could reach the market quickly.

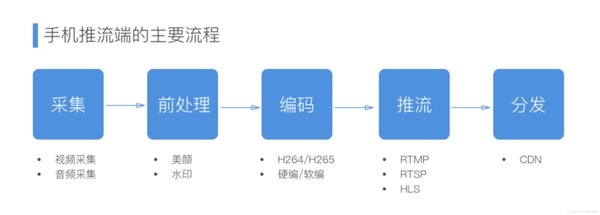

Practitioners know that a complete live broadcast product includes the following stages: the push end (capture, pre-processing, encoding, stream pushing), server-side processing (transcoding, recording, screenshots, pornography detection), the player (stream pulling, decoding, rendering), and the interactive system (chat room, gift system, likes). Below I describe the work the live streaming SDK does at each stage.

I. What does the mobile live push end need to do?

The live push end is the anchor's end. It captures video data from the phone camera and audio data from the microphone, then pre-processes, encodes, and packages the data, and finally pushes it to the CDN for distribution.

1. Capture

The mobile live broadcast SDK captures audio and video data directly from the phone's camera and microphone. Sampled video data is generally in RGB or YUV format, and sampled audio data is generally in PCM format. The raw captured audio and video is very large, so it must be compressed to make transmission efficient.
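To give a sense of how large the raw data is, here is a rough back-of-the-envelope calculation. The figures are assumptions chosen for illustration and do not come from the article: 720p video at 30 frames per second in YUV 4:2:0 (12 bits per pixel), and 44.1 kHz, 16-bit stereo PCM audio.

```python
def raw_video_bits_per_second(width, height, fps, bits_per_pixel):
    """Uncompressed video bit rate: every pixel of every frame is stored."""
    return width * height * bits_per_pixel * fps


def raw_pcm_bits_per_second(sample_rate_hz, bit_depth, channels):
    """Uncompressed PCM audio bit rate."""
    return sample_rate_hz * bit_depth * channels


# Assumed example values (not from the article).
video_bps = raw_video_bits_per_second(1280, 720, 30, 12)  # YUV 4:2:0 = 12 bits/pixel
audio_bps = raw_pcm_bits_per_second(44100, 16, 2)

print(f"raw video: {video_bps / 1e6:.1f} Mbit/s")
print(f"raw audio: {audio_bps / 1e6:.2f} Mbit/s")
```

Under these assumptions the raw video alone runs to hundreds of megabits per second, far more than a mobile uplink can carry, which is why encoding is unavoidable.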

2. Pre-processing

This stage mainly handles effects such as beautification, watermarks, and blur. A beauty filter is practically standard in live streaming: in our research we found many cases where a product was abandoned because it had no beauty filter. In addition, the state has explicitly required that all live broadcasts carry a watermark and that recordings be kept available for playback for more than 15 days.

A beauty filter uses an algorithm to identify the skin regions of an image and then adjusts the color values in those regions. The skin region can be found by color comparison. To whiten it, the color values can be adjusted directly, or a white layer can be added and its transparency tuned. For beauty processing, the best-known library is GPUImage, which offers a rich set of effects, supports both iOS and Android, and lets you write your own algorithms to get the effect you want. GPUImage has more than 120 common filter effects built in, and adding a filter takes only a few lines of code.
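The idea of finding skin by color comparison and then blending in a white layer can be sketched in a few lines. This is only an illustration, not GPUImage's algorithm: the RGB thresholds in `is_skin` are a common rule-of-thumb heuristic assumed here, and all function names are hypothetical. Production filters run on the GPU and use far more refined detection and smoothing.

```python
def is_skin(r, g, b):
    """Rough RGB skin test (assumed heuristic, for illustration only)."""
    return (
        r > 95 and g > 40 and b > 20
        and max(r, g, b) - min(r, g, b) > 15
        and abs(r - g) > 15
        and r > g and r > b
    )


def whiten_pixel(pixel, strength_percent=30):
    """Blend a skin pixel toward white; leave other pixels untouched.

    strength_percent is the opacity of the white layer, from 0 to 100.
    """
    r, g, b = pixel
    if not is_skin(r, g, b):
        return pixel
    return tuple(c + (255 - c) * strength_percent // 100 for c in (r, g, b))


def whiten_image(pixels, strength_percent=30):
    """Apply whitening to an image given as rows of (r, g, b) tuples."""
    return [[whiten_pixel(p, strength_percent) for p in row] for row in pixels]


# One skin-toned pixel and one blue pixel.
frame = [[(200, 150, 120), (30, 60, 200)]]
print(whiten_image(frame))
```

Only the pixel that passes the skin test is lightened. Raising `strength_percent` makes the white layer more opaque.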

3. Encoding

To make mobile video easier to push, pull, and store, video compression is normally used to reduce its size. The most common video codec today is H.264. For audio, AAC is the most common format, and others such as MP3 and WMA are also options. Compression greatly improves the efficiency of storing and transmitting video. The compressed video must, of course, be decoded before it can be played.

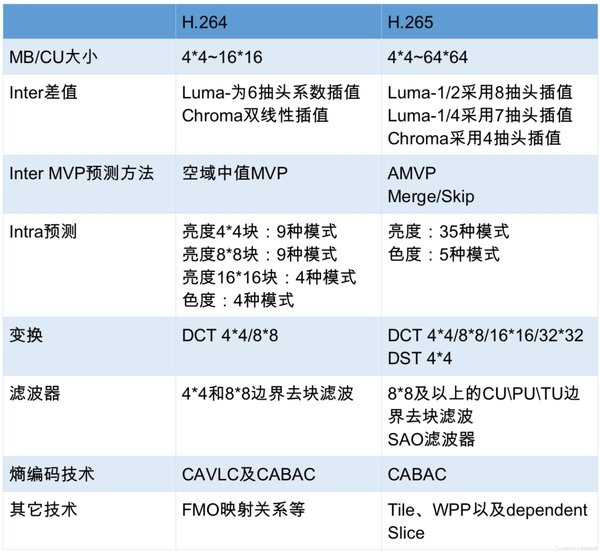

Compared with the earlier H.264, the H.265 codec standard, approved in 2013, is a major improvement: it needs only about half the bandwidth to play video of the same quality, so 1080p HD video can be transmitted even over networks slower than 1.5 Mbps. Providers such as Alibaba Cloud and Kingsoft Cloud are promoting their own H.265 codec technology. As live broadcasting grows rapidly and its dependence on bandwidth increases, the trend is for H.265 to replace H.264 entirely.

Technical differences between the H.264 and H.265 modules:

In addition, hardware encoding has become the preferred solution for mobile live broadcasting, because software encoding shows clear weaknesses with video at 720p and above. Hardware encoding on iOS has relatively good compatibility and can be adopted directly. On Android, however, the performance of the MediaCodec encoder still varies widely across chip platforms, and achieving compatibility with every platform remains very costly.

4. Stream pushing

Before audio and video data can be pushed, it must be encapsulated with a transport protocol so that it becomes streaming data. Common streaming protocols include RTSP, RTMP, and HLS. Latency over RTMP is usually 1–3 seconds, and because mobile live broadcasting demands near real-time delivery, RTMP has become its most commonly used streaming protocol. Finally, the audio and video stream is pushed to the network under the control of a QoS algorithm and distributed through the CDN. Network instability is very common in live broadcast scenarios, and QoS is needed to protect the viewing experience of users on unstable networks. Buffers are usually set on both the anchor side and the playback side to even out the bit rate. Dynamically adjusting the bit rate and frame rate is also the most common strategy for coping with network conditions that change in real time.
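The dynamic bit rate strategy can be sketched as a simple control rule: drop quickly when the measured uplink cannot sustain the current bit rate, and climb back slowly when it can. This is a minimal illustration, not the algorithm of any particular SDK. The function name, the 80% headroom, the 10% step-up, and the 300 to 1500 kbps range are all assumptions.

```python
def next_bitrate_kbps(current_kbps, measured_bandwidth_kbps,
                      min_kbps=300, max_kbps=1500,
                      headroom_percent=80, step_up_percent=110):
    """Pick the encoder bit rate for the next interval.

    Only a share of the measured uplink bandwidth is used so that audio
    and protocol overhead still fit (all defaults are assumed values).
    """
    target = measured_bandwidth_kbps * headroom_percent // 100
    if current_kbps > target:
        # Network got worse: drop straight to what it can carry.
        candidate = target
    else:
        # Network has room: ramp up gradually to avoid oscillation.
        candidate = min(current_kbps * step_up_percent // 100, target)
    return max(min_kbps, min(max_kbps, candidate))


# Simulate an uplink that degrades and then recovers.
bitrate = 1000
for bandwidth in (2000, 500, 500, 2000, 2000):
    bitrate = next_bitrate_kbps(bitrate, bandwidth)
    print(f"bandwidth {bandwidth} kbps -> encode at {bitrate} kbps")
```

The asymmetry is deliberate: reducing the bit rate late causes stalls for viewers, while raising it late only costs some picture quality for a short time.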

Of course, it is not practical to do all of the network push work yourself. It is best left to a CDN service provider that offers a stream pushing solution. As far as I know, Alibaba Cloud is the only vendor in China able to develop its own CDN cache servers, and their performance is well assured. Most live broadcast platforms connect to several video cloud providers at the same time, so that the pull lines can serve as backups for one another. Optimizing the video stream after it has been pushed also improves the smoothness and stability of the broadcast.

II. What work does the server need to do?

To adapt to every terminal and platform, the server also needs to transcode the stream. For example, it should support pulling in RTMP, HLS, FLV, and other formats, and should output one stream in multiple renditions to suit terminals with different networks and resolutions.

1. Screenshots, recording, watermarks

Cloud service providers such as Alibaba Cloud offer real-time transcoding, which converts the high-bit-rate stream pushed by the user (such as 720p) into lower-definition streams (such as 360p) in real time to suit the needs of the player. Building your own real-time transcoding system is extremely expensive. An 8-core machine can transcode only about 10 streams in real time. If an ordinary live broadcasting platform has 1000 streams, it needs 100 machines, and with later operation and maintenance added, the cost is more than an ordinary company can bear.
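The capacity estimate above is a simple ceiling division, sketched here so the numbers can be changed easily. The function name is hypothetical, and the figure of 10 streams per 8-core machine is taken from the paragraph above.

```python
import math


def transcoding_servers_needed(concurrent_streams, streams_per_server=10):
    """Machines required if each one transcodes a fixed number of streams."""
    return math.ceil(concurrent_streams / streams_per_server)


print(transcoding_servers_needed(1000))
```

Note that this counts only steady-state capacity. Spare machines for peaks and failures would come on top of it.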

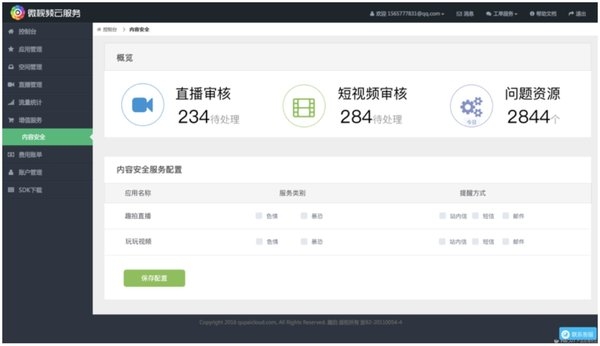

2. Pornography detection

On April 14, 2016, the Ministry of Culture placed Douyu, Huya, YY, Panda TV, Liujianfang, and 9158 on its investigation list as live webcast platforms suspected of providing content that promoted obscenity, violence, and incitement to crime. Government regulation helps create a healthy ecosystem for the live broadcast industry and sets it on a sound path of development. It also means that pornography detection is essential to keep a live broadcast product safe, and that detecting such content by technical means is the inevitable choice for mobile live broadcast platforms.

There are two main types of solution on the market:

The first takes screenshots of the video, runs pornography detection on each image, and returns a detection result and a score. Typical vendors are Alibaba (Green Net) and Tuputech. They now also accept video directly and return results after server-side analysis. The business system usually calls the detection service and then controls the live stream based on its results, for example by cutting off the stream or blocking the account.

The second works together with the CDN and analyzes the live stream directly. Results are classified as pornographic, suspected pornographic, sexy, or normal, and the business system controls the live stream directly according to the result. A typical vendor is Viscovery. The advantage of this solution is better real-time performance. The disadvantage is that it must be deployed in the CDN or in your own data center, so it is relatively expensive to use.
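Whichever detection service is used, the business system ends up mapping a result to an action on the stream. The sketch below shows one possible mapping. It is an assumption for illustration: the label strings, the action names, the 0.9 confidence threshold, and the function name are all hypothetical, and real services define their own response formats.

```python
# Hypothetical mapping from detection category to business action.
ACTIONS = {
    "pornographic": "cut_stream_and_block_account",
    "suspected_pornographic": "manual_review",
    "sexy": "allow",
    "normal": "allow",
}


def decide_action(label, score, min_confidence=0.9):
    """Choose what to do with a live stream given a detection result.

    label is the category returned by the detection service, and score is
    its confidence between 0 and 1. A low-confidence "pornographic" result
    is sent to a human reviewer instead of being cut automatically.
    """
    if label not in ACTIONS:
        raise ValueError(f"unknown label: {label}")
    if label == "pornographic" and score < min_confidence:
        return ACTIONS["suspected_pornographic"]
    return ACTIONS[label]


for label, score in [("pornographic", 0.97), ("pornographic", 0.6), ("sexy", 0.8)]:
    print(label, score, "->", decide_action(label, score))
```

Routing uncertain results to manual review trades some reviewer workload for fewer wrongly blocked anchors. Where to set the threshold is a product decision.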

There are also one-stop live broadcast solution providers. With them, the user only has to configure the detection service in the console to get real-time review of every application and every live stream. The cloud provider returns detection results to the console in real time, where the user can directly view screenshots of the pornographic broadcast and of the offending screen. The user can also control the live stream there and cut off a problem stream. The provider additionally offers SMS, e-mail, and in-site message notifications, so that no illegal video is missed and the platform is not harmed. This is the approach we used.

III. What work does the player need to do?

How can the player start playback within a second, and how can it deliver a stable, smooth, stutter-free live stream while keeping picture and sound clear? These goals require the player to be optimized together with the server so that scheduling is accurate.

1. Stream pulling

Pulling a stream is the reverse of pushing one. First, the player obtains the stream. The standard pull formats are RTMP, HLS, and FLV. RTMP is Adobe's proprietary protocol. It is well supported by open source software and libraries, such as the open source librtmp library, and a player can play RTMP live streams easily as long as it supports Flash Player. Live broadcast latency is generally 1–3 seconds.

HLS is an HTTP-based streaming protocol proposed by Apple. It can be opened and played directly in HTML5, so a stream can be shared through WeChat, QQ, and similar apps and watched directly by the recipient. It is fair to say that a mobile live broadcast app must support the HLS pull protocol. Its drawback is that latency is usually greater than 10 seconds. FLV (HTTP-FLV) transports streaming media content over HTTP. It carries no risk of being tied to Adobe's patents, and live broadcast latency can also be kept to 1–3 seconds.

The differences between the pull protocols:

The cloud live streaming service we use provides all three formats to meet the needs of different business scenarios. For example, live playback with strict real-time or interactivity requirements can use RTMP or FLV, while playback of recordings or cross-platform requirements are better served by HLS. The three protocols can of course be used at the same time, each in the scenario that suits it.
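The selection rule described above can be written down as a small helper. This is only a sketch of the reasoning in this section: the function name and the two boolean flags are hypothetical, and the latency figures are the typical values quoted earlier.

```python
# Typical latency per pull protocol, as quoted in this section.
TYPICAL_LATENCY = {
    "RTMP": "1-3 s",
    "HTTP-FLV": "1-3 s",
    "HLS": "more than 10 s",
}


def choose_pull_protocols(low_latency, cross_platform):
    """Return candidate pull protocols for a playback scenario.

    low_latency: the scenario is real-time or interactive.
    cross_platform: the stream must open in HTML5 pages or shared links.
    """
    candidates = []
    if low_latency:
        candidates += ["RTMP", "HTTP-FLV"]
    if cross_platform or not low_latency:
        # Replay and shared web pages tolerate delay, so HLS fits.
        candidates.append("HLS")
    return candidates


for flags in [(True, False), (False, True), (True, True)]:
    picks = choose_pull_protocols(*flags)
    print(flags, "->", [(p, TYPICAL_LATENCY[p]) for p in picks])
```

When both flags are set the helper returns all three, which matches the observation that the protocols can be offered side by side.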

2. Decoding and rendering

Once the pull step has obtained the encapsulated video data, the data must be decoded by a decoder and rendered before it can be shown in the player. Decoding is the reverse of encoding: it extracts raw data from the encoded audio and video. The H.264 and H.265 formats described earlier use lossy compression, so the decoded data is not identical to the originally sampled data and some information is lost. Tuning encoding parameters to keep the picture as close to the original as possible at the smallest video size has therefore become a core secret of every video company.

To support high definition, hardware decoding is still the necessary choice. As mentioned earlier, iOS is a relatively uniform and closed system, so its support is better. Android devices differ widely from platform to platform, so making the codec fully compatible with all of them takes a great deal of work.

IV. The interactive system in mobile live broadcasting

The most common interactions in mobile live broadcasting are chat rooms (barrage), likes, rewards, and gifts. The interactive system has to deliver messages in real time and in both directions, and it is mostly implemented with IM (instant messaging) functions. In rooms with a large number of online users, the volume of barrage messages is so large that the anchor and the users cannot actually read them all. To relieve pressure on the servers, some optimizations must therefore be made in the product strategy.

1. Chat room

Barrage is the main way users interact with the anchor in mobile live broadcasting, and it is in fact the chat room function of IM. A chat room is similar to a group chat, but its messages do not need to be delivered to offline users and its message history does not need to be viewable. Users can see chat messages and member information only after entering the chat room. Because network conditions are complex and constantly changing, the user should also be connected to the barrage message service through a nearby single-line data center of the user's own carrier, chosen by the user's location, so that barrage messages arrive more promptly.
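The two properties that separate a chat room from a group chat, no delivery to offline users and no message history, can be illustrated with an in-memory sketch. The `ChatRoom` class and its methods are hypothetical. A real IM service would deliver messages over long-lived network connections rather than Python lists.

```python
class ChatRoom:
    """In-memory chat room: messages reach only members who are present."""

    def __init__(self):
        # user_id -> list of (sender_id, text) received while in the room
        self.members = {}

    def join(self, user_id):
        # A new member starts with an empty inbox: no history is replayed.
        self.members[user_id] = []

    def leave(self, user_id):
        # Leaving discards the inbox; nothing is queued for offline users.
        self.members.pop(user_id, None)

    def send(self, sender_id, text):
        if sender_id not in self.members:
            raise PermissionError("join the room before sending")
        for inbox in self.members.values():
            inbox.append((sender_id, text))


room = ChatRoom()
room.join("alice")
room.send("alice", "hi")      # bob has not joined yet and never sees this
room.join("bob")
room.send("alice", "hello")
print(room.members["bob"])
```

Because nothing is stored per absent user, the cost of a message grows only with the number of people currently in the room, which is what makes very large rooms feasible.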

2. Gift system

The gift system is standard on most mobile live broadcast platforms and is their main source of income. Gift giving also strengthens the interaction between users and the anchor, and it is the main reason anchors depend on the platform.

Technically, sending and receiving gifts is also implemented through the chat room interface, usually with a custom message type in IM. When a user sends or receives a gift, the client renders the gift graphic that corresponds to the custom message.
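A custom gift message can be as simple as a small JSON payload that the receiving client recognizes and maps to a graphic. The sketch below assumes a JSON format. The field names, gift identifiers, and file names are hypothetical, and each IM SDK defines its own custom message API.

```python
import json

# Hypothetical table mapping gift ids to the graphic shown in the room.
GIFT_GRAPHICS = {"rose": "rose.png", "rocket": "rocket.png"}


def encode_gift_message(sender_id, gift_id, count=1):
    """Build the custom message body sent through the chat room."""
    return json.dumps(
        {"type": "gift", "sender": sender_id, "gift_id": gift_id, "count": count}
    )


def render_gift(raw_message):
    """Return a description of what to draw, or None for non-gift messages."""
    message = json.loads(raw_message)
    if message.get("type") != "gift":
        return None
    graphic = GIFT_GRAPHICS.get(message.get("gift_id"))
    if graphic is None:
        return None  # unknown gift: ignore rather than crash the client
    return f"{message['sender']} sent {message['count']} x {graphic}"


print(render_gift(encode_gift_message("alice", "rose", 3)))
```

Ignoring unknown gift ids lets older clients stay usable when the platform adds new gifts.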

The above is the experience with live broadcast products that we have gathered from using third-party SDK services. We hope it helps entrepreneurs and practitioners.
